Environment

To demonstrate the capabilities of general robot policies, I chose a simulation environment based on ALOHA (A Low-cost Open-source Hardware System for Bimanual Teleoperation) [1]. One of its five design principles is versatility: it can be applied to a wide range of fine manipulation tasks with real-world objects. This makes it a perfect playground for demonstrating generalizability.

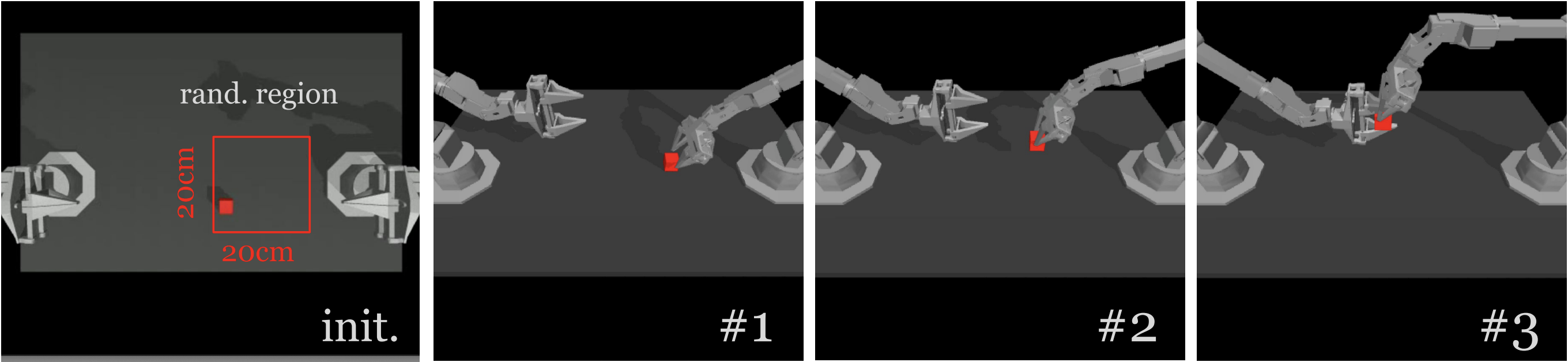

Task Definition

For the simulated Transfer Cube task, the right arm must first pick up the red cube lying on the table and then place it inside the gripper of the left arm. Because the clearance between the cube and the left gripper is small (around 1 cm), small errors can result in collisions and task failure [1].
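The staged nature of the task also suggests how an episode can be scored. The following is a hypothetical sketch in the spirit of the staged reward used in the original ALOHA simulation code [1]. The `Contacts` flags, the stage values and the helper names are assumptions for illustration, not this project's evaluation code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Contacts:
    """Contact flags for the cube at one simulation step (assumed to come from the simulator)."""
    right_gripper: bool  # cube touches the right (picking) gripper
    left_gripper: bool   # cube touches the left (receiving) gripper
    table: bool          # cube touches the table


MAX_REWARD = 4  # hypothetical final stage: cube held by the left gripper, off the table


def transfer_cube_reward(c: Contacts) -> int:
    """Staged reward: each later stage overwrites the earlier ones."""
    reward = 0
    if c.right_gripper:
        reward = 1  # right gripper touched the cube
    if c.right_gripper and not c.table:
        reward = 2  # cube lifted off the table
    if c.left_gripper:
        reward = 3  # handover attempted
    if c.left_gripper and not c.table:
        reward = 4  # left gripper holds the cube in the air
    return reward


def episode_succeeded(rewards: list[int]) -> bool:
    """Count an episode as a success if it reaches the final stage at any step."""
    return max(rewards, default=0) == MAX_REWARD


rollout = [
    Contacts(right_gripper=False, left_gripper=False, table=True),  # cube resting
    Contacts(right_gripper=True, left_gripper=False, table=True),   # grasped
    Contacts(right_gripper=True, left_gripper=False, table=False),  # lifted
    Contacts(right_gripper=True, left_gripper=True, table=False),   # handed over
]
rewards = [transfer_cube_reward(c) for c in rollout]
print(rewards, episode_succeeded(rewards))  # prints: [0, 1, 2, 4] True
```

Under this sketch, a rollout that lifts the cube but collides during the handover stops at an intermediate stage and counts as a failure.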

Finetuning

I believe that deploying robot learning algorithms to real-world tasks will, at least for now, require finetuning to specific tasks. In this demo I am finetuning Octo, which was mainly pretrained on single-arm robot data with image observations and end-effector positions as the action space [2][3]. For the ALOHA simulation environment, the new observation space consists of a single camera image and the robot's proprioception data, and the new action space is joint control for bimanual interaction.
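To make the change of interface concrete, the sketch below compares the two setups as plain data. The layouts are assumptions for illustration: a 7-dimensional single-arm end-effector action (3 position, 3 rotation, 1 gripper) with no proprioception input for the pretraining data, and two arms with 6 joints plus a gripper each for ALOHA. `SpaceSpec` and the helper functions are hypothetical names, not part of the Octo API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SpaceSpec:
    """Hypothetical summary of a policy's input/output interface."""
    proprio_dim: int  # length of the proprioceptive state vector (0 = not used)
    action_dim: int   # length of one action vector
    action_type: str  # what the action vector controls


# Assumed pretraining interface: single-arm end-effector control,
# 3 position + 3 rotation + 1 gripper, and no proprioception input.
PRETRAIN = SpaceSpec(
    proprio_dim=0,
    action_dim=3 + 3 + 1,
    action_type="single-arm end-effector",
)

# Assumed ALOHA interface: two arms with 6 joints + 1 gripper each,
# used both as the proprioceptive state and as the joint-position action.
NUM_ARMS, JOINTS_PER_ARM, GRIPPER = 2, 6, 1
ALOHA = SpaceSpec(
    proprio_dim=NUM_ARMS * (JOINTS_PER_ARM + GRIPPER),
    action_dim=NUM_ARMS * (JOINTS_PER_ARM + GRIPPER),
    action_type="bimanual joint positions",
)


def needs_new_action_head(old: SpaceSpec, new: SpaceSpec) -> bool:
    """The action head must be replaced if the action vector changes size or meaning."""
    return (old.action_dim, old.action_type) != (new.action_dim, new.action_type)


def needs_new_proprio_input(old: SpaceSpec, new: SpaceSpec) -> bool:
    """A new input tokenizer is needed for proprioception the old model never saw."""
    return new.proprio_dim > 0 and old.proprio_dim == 0


print(ALOHA.action_dim, needs_new_action_head(PRETRAIN, ALOHA), needs_new_proprio_input(PRETRAIN, ALOHA))
# prints: 14 True True
```

Under these assumptions both checks come out true, suggesting that finetuning here involves attaching a new proprioception input and a new 14-dimensional action head rather than only updating existing weights.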

Policy

For the control policy I am deploying Octo, a widely applicable generalist policy for robotic manipulation [3]. The Octo model is a transformer-based diffusion policy pretrained on 800k robot episodes from the Open X-Embodiment dataset [2]. It supports flexible task and observation definitions and can be quickly finetuned to new observation and action spaces. The Octo Model Team introduced two initial versions: Octo-Small (27M parameters) and Octo-Base (93M parameters).
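Much of this flexibility comes from how the transformer's tokens attend to each other. The Octo paper [3] describes a sequence of task tokens, observation tokens and learned readout tokens with a block-wise attention mask: observation tokens attend to task tokens and to observations from the same or earlier timesteps, while readout tokens read from those but are never attended to by task or observation tokens; the action head decodes actions from the readout embeddings. The NumPy sketch below builds such a mask for a simplified layout. The function name, the token layout, and the choice to let readout tokens of the same timestep see each other are assumptions; this is not Octo's actual implementation.

```python
import numpy as np


def octo_style_mask(n_task: int, n_obs: int, n_readout: int, n_steps: int) -> np.ndarray:
    """Boolean mask where mask[q, k] is True if query token q may attend to key token k.

    Assumed layout: [task | obs_0, readout_0 | obs_1, readout_1 | ...].
    """
    # Label every token with its kind and timestep (-1 for task tokens).
    kinds = ["task"] * n_task
    steps = [-1] * n_task
    for t in range(n_steps):
        kinds += ["obs"] * n_obs + ["readout"] * n_readout
        steps += [t] * (n_obs + n_readout)

    n = len(kinds)
    mask = np.zeros((n, n), dtype=bool)
    for q in range(n):
        for k in range(n):
            if kinds[k] == "task":
                # Every token may read the task; task tokens see only the task.
                mask[q, k] = True
            elif kinds[k] == "obs":
                # Observations are visible to obs/readout tokens at the same or a later step.
                mask[q, k] = kinds[q] != "task" and steps[k] <= steps[q]
            else:
                # Readout keys are hidden from task and obs tokens; readouts of the
                # same timestep see each other (an assumption of this sketch).
                mask[q, k] = kinds[q] == "readout" and steps[k] == steps[q]
    return mask


mask = octo_style_mask(n_task=2, n_obs=3, n_readout=1, n_steps=2)
```

Because nothing attends to the readout tokens, new readouts or a new action head can be attached during finetuning without changing how the pretrained task and observation tokens interact, which is the design argument the Octo paper makes.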

Dataset

I am using the simulated ALOHA cube handover dataset, aloha_sim_cube_scripted_dataset, which contains 48 expert demonstrations. The dataset is available at https://rail.eecs.berkeley.edu/datasets/.
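With only 48 episodes, every timestep has to become a training sample. Octo-style policies condition on a short history of observations and predict a chunk of future actions (the action-chunking idea from ACT [1]). The sketch below slices one episode into such (history, chunk) pairs. `make_windows` is a hypothetical helper, and padding by repeating the first observation and the last action is a simplification; a real pipeline may instead mask padded entries.

```python
import numpy as np


def make_windows(obs: np.ndarray, actions: np.ndarray, window: int, horizon: int):
    """Slice one episode into (observation history, action chunk) training pairs.

    obs:     (T, obs_dim) per-step observations
    actions: (T, act_dim) per-step actions
    Returns arrays of shape (T, window, obs_dim) and (T, horizon, act_dim).
    """
    T = obs.shape[0]
    obs_windows, act_chunks = [], []
    for t in range(T):
        # History t-window+1 .. t, clipped so early steps repeat the first observation.
        hist_idx = np.clip(np.arange(t - window + 1, t + 1), 0, T - 1)
        # Chunk t .. t+horizon-1, clipped so late steps repeat the last action.
        chunk_idx = np.clip(np.arange(t, t + horizon), 0, T - 1)
        obs_windows.append(obs[hist_idx])
        act_chunks.append(actions[chunk_idx])
    return np.stack(obs_windows), np.stack(act_chunks)


# Toy episode: 5 steps, 2-dim observations, 3-dim actions.
obs = np.arange(10).reshape(5, 2)
actions = np.arange(15).reshape(5, 3)
o, a = make_windows(obs, actions, window=2, horizon=3)
print(o.shape, a.shape)  # prints: (5, 2, 2) (5, 3, 3)
```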

Training

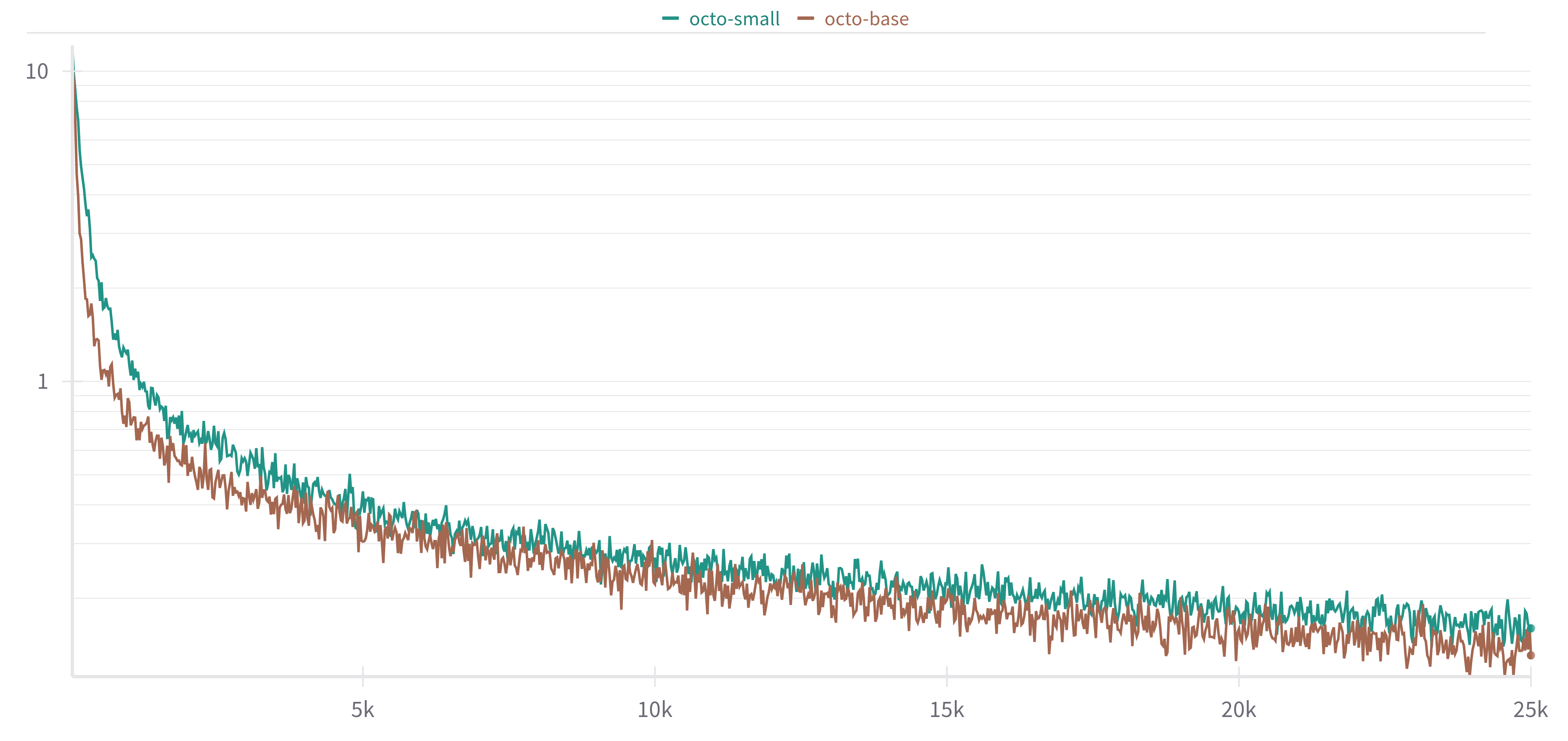

The model was trained for 25,000 steps with a batch size of 128 (Octo-Small) and 64 (Octo-Base) on a TPU v3-8. Each run took around 3 hours and cost around $20. The training history can be found here: https://wandb.ai/j4nn1k/octo-finetuning.
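Putting these numbers side by side shows how often the model revisits the small dataset. The arithmetic below assumes 400 timesteps per episode and one training sample per timestep; both are assumptions, not figures from this project's logs. `training_budget` is a hypothetical helper.

```python
def training_budget(steps: int, batch_size: int, n_episodes: int, episode_len: int,
                    hours: float, cost_usd: float) -> dict:
    """Back-of-the-envelope training statistics for one finetuning run."""
    samples_seen = steps * batch_size
    dataset_size = n_episodes * episode_len  # assumes one sample per timestep
    return {
        "samples_seen": samples_seen,
        "epochs": samples_seen / dataset_size,
        "steps_per_second": steps / (hours * 3600),
        "usd_per_hour": cost_usd / hours,
    }


# Figures from the text; the 400-step episode length is an assumption.
small = training_budget(25_000, 128, n_episodes=48, episode_len=400, hours=3.0, cost_usd=20.0)
base = training_budget(25_000, 64, n_episodes=48, episode_len=400, hours=3.0, cost_usd=20.0)
print(f"{small['samples_seen']} samples, {small['epochs']:.1f} epochs")  # prints: 3200000 samples, 166.7 epochs
print(f"{base['samples_seen']} samples, {base['epochs']:.1f} epochs")    # prints: 1600000 samples, 83.3 epochs
```

Under that assumption, Octo-Small passes over the 48 demonstrations roughly 167 times and Octo-Base roughly 83 times.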

Results

I evaluated the finetuned model on my desktop computer, using an RTX 3070 GPU for inference. With Octo-Small, the policy achieved a success rate of around 75% on the Transfer Cube task.
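How precise a success rate is depends on the number of evaluation rollouts behind it. A minimal sketch, assuming each rollout is an independent success/failure trial, computes a Wilson score interval for the rate. The rollout count in the usage line is hypothetical and chosen only for illustration; it is not the number of episodes used in this evaluation.

```python
import math


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial success rate (z=1.96 gives ~95% confidence)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p = successes / trials
    z2 = z * z
    denom = 1 + z2 / trials
    center = (p + z2 / (2 * trials)) / denom
    half = (z / denom) * math.sqrt(p * (1 - p) / trials + z2 / (4 * trials * trials))
    return center - half, center + half


# Hypothetical: 75% measured over 40 rollouts (30 successes).
lo, hi = wilson_interval(30, 40)
print(f"{lo:.2f}-{hi:.2f}")  # prints: 0.60-0.86
```

The same 75% measured over ten times as many rollouts would give a much narrower interval, so the number of evaluation episodes is worth reporting alongside the rate.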

Conclusion

In this project I demonstrated how finetuning can adapt a generalist control policy to a new environment. With only 48 demonstrations, I was able to adapt the policy to a new observation space (top camera image and proprioception) and a new action space (bimanual joint control).

References

[1] Learning Fine-Grained Bimanual Manipulation with Low-Cost Hardware introduces ALOHA and ACT. ALOHA is a low-cost open-source hardware system for bimanual teleoperation. ACT (Action Chunking with Transformers) predicts a sequence of actions (an "action chunk") instead of a single action per step, as in standard behavior cloning.

[2] Open X-Embodiment: Robotic Learning Datasets and RT-X Models introduces the largest open-source real robot dataset to date.

[3] Octo: An Open-Source Generalist Robot Policy introduces an ongoing effort to build open-source, widely applicable generalist policies for robotic manipulation. The Octo model is a transformer-based diffusion policy, pretrained on 800k robot episodes from the Open X-Embodiment dataset.